A participant in the International Youth Festival in Sochi, Russia, walks by an anti-Western propaganda exhibition in March 2024 that decried NATO as having a “history of deception.” The U.S. Global Engagement Center fights anti-Western propaganda efforts by the People’s Republic of China and Russia. AFP/GETTY IMAGES

THE WATCH STAFF

The Watch recently discussed with James P. Rubin, special envoy and coordinator of the U.S. Department of State’s Global Engagement Center, issues of importance to our readers. The Watch featured the Global Engagement Center in a February 2021 article, “Fighting for Truth,” but many things have changed since then.

THE WATCH: Special Envoy James Rubin, thank you for joining us today to discuss your important work at the Global Engagement Center.

RUBIN: Thank you, I’m excited to be here.

THE WATCH: With over 35 years of experience in foreign policy, what are some key milestones in your career that led you to your current role as special envoy and coordinator for the Global Engagement Center?

RUBIN: My previous work as the assistant secretary of state for public affairs and chief spokesman for Secretary of State Madeleine K. Albright was particularly formative. My direct involvement in the Balkans peace process was difficult, but the U.S. government and our NATO allies made a positive difference in the lives of so many in that region, a difference that endures to this day. Since then, I’ve been in and out of government and media but have always stayed engaged in, and informed about, foreign affairs. A year or so ago, Secretary [Antony] Blinken invited me back to the department to lead the Global Engagement Center in our effort against a growing threat to our national security: foreign information manipulation.

THE WATCH: What is the mission of the Global Engagement Center and how does the organization work with allies and partners?

RUBIN: Our congressionally mandated mission is “to direct, lead, synchronize, integrate, and coordinate U.S. federal government efforts to recognize, understand, expose, and counter foreign state and non-state propaganda and disinformation efforts aimed at undermining or influencing the policies, security, or stability of the United States, its allies and partner nations.” That’s a mouthful, but in essence, we are the part of our government that’s ringing the alarm bell on information manipulation as a critical national security issue, one that our adversaries and competitors are actively leveraging. Through our work, which spans our government and those of our allies and partners, we are finding, exposing and disrupting foreign state exploitation of the open information environment abroad. We are also convening and galvanizing an international community of like-minded allies and partners to push back, collectively and forcefully, through targeted policies, capacity building and multilateral engagement.

THE WATCH: Since 2020, what has been the impact of increased propaganda and disinformation from Russia and the People’s Republic of China (PRC) on U.S. diplomatic relations and global perceptions of the U.S.?

RUBIN: I can tell you it hasn’t been good for the United States. I can’t stress enough how heavily our adversaries are investing in information manipulation, disinformation and propaganda, creating an asymmetrical advantage for themselves. I would also say that no U.S. foreign policy objective or goal is immune to foreign malign influence and information manipulation working to its detriment. There is an imbalance in how the U.S. is approaching this national security threat: where some on our side see a “communications issue,” our adversaries are treating it as information warfare, and they see their information operations as arguably more outcome-determinative than operations in the traditional physical domains of air, land, sea and space. As we used to say on the Hill, “budget is policy.” Countries resource what they deem important, and both Russia and the PRC are resourcing their information manipulation capabilities heavily.

THE WATCH: Since its establishment in 2017, what has been the role of the GEC in countering the propaganda, disinformation and misinformation from Russia and the PRC?

RUBIN: The GEC has a number of important roles and functions, but I think the most critical two are 1) exposing and disrupting foreign information operations and their hidden exploitation of the open information environment, and 2) convening and galvanizing a like-minded collective to strategize and take collaborative action to push back against the purveyors of disinformation undercutting our interests abroad.

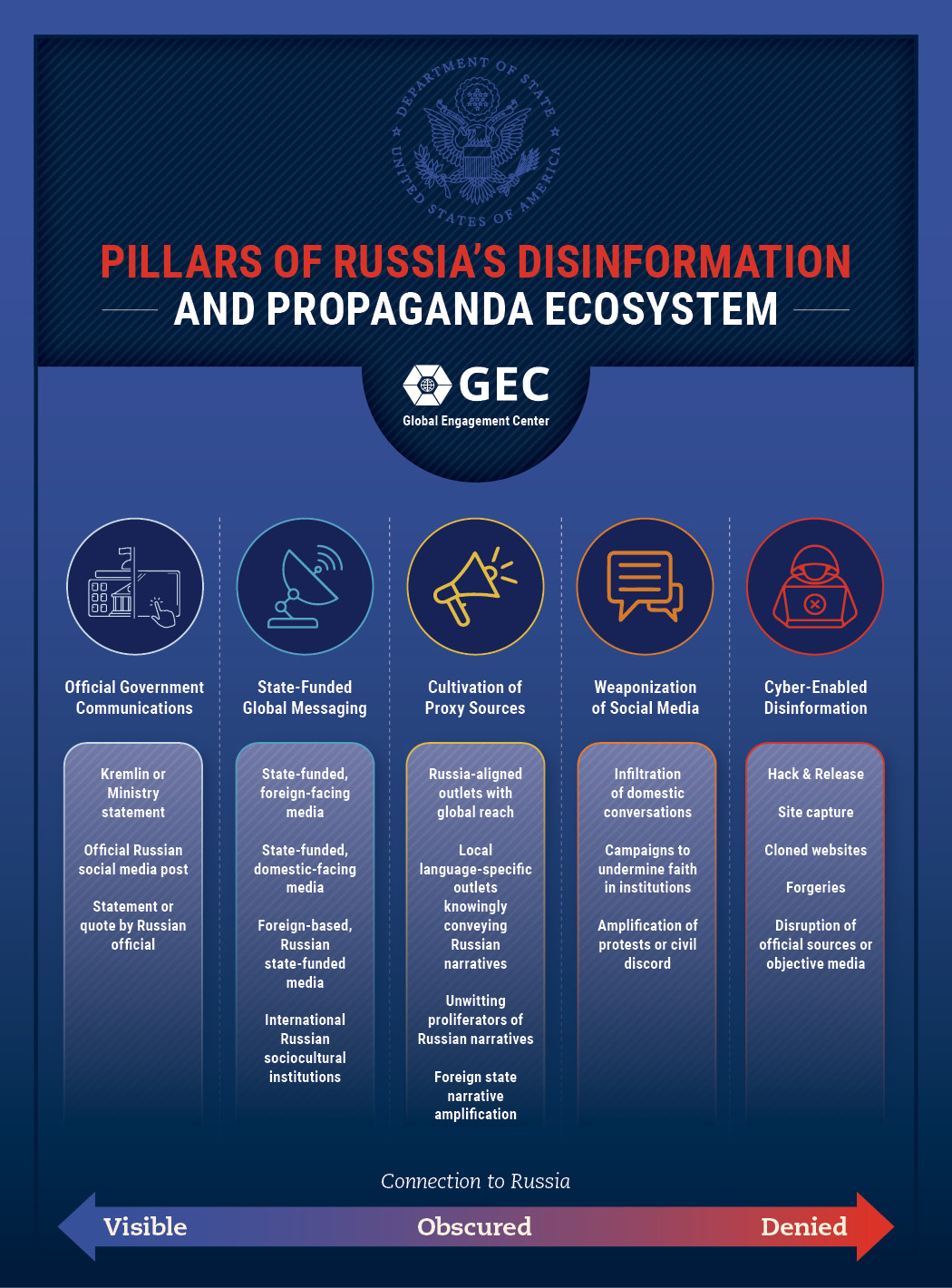

THE WATCH: The groundbreaking GEC report “Pillars of Russia’s Disinformation and Propaganda Ecosystem” in August 2020 succinctly described complex, malign Russian activity in the information environment. Have U.S. allies and partners adopted the report’s findings in their own strategies and plans to combat Russia’s propaganda and disinformation?

RUBIN: There have been many positive outcomes across U.S. and allied governments and their civil societies resulting from the public release of this report. At that time, we were able to model, map and categorize what appeared on the surface to be a disparate and chaotic mass of Russian information warfare activity into distinct but connected groupings, and to share that publicly with the world. This report has equipped our partner governments, as well as the free media, to see Russia’s disinformation and propaganda ecosystem for what it is, a threat to democracy, and to understand how Russia uses it to undermine democratic ideals and institutions. As just one example, this GEC report, and subsequent reporting, ultimately resulted in sanctions against media organizations that had been operating as proxies of Russian intelligence. This report has allowed our allies and us to speak a common vernacular and work collectively against the threats Russia poses to us all.

THE WATCH: The GEC published another significant report, “How the PRC Seeks to Reshape the Global Information Environment,” in September 2023. Can you summarize its findings on the PRC’s tactics, techniques and procedures that are used to manipulate the information environment? How does this align with the PRC’s broader strategic objectives?

RUBIN: As with our Russian pillars release, our PRC-focused report publicly lays out the U.S. government’s understanding of how the PRC conducts its industrial-scale information manipulation operations to the detriment of democratic ideals and freedom of expression. The report distills the PRC’s global information manipulation ecosystem into five elements, which together enable the PRC to gain overt and covert control of media content and platforms, suppress global freedom of expression and support an emerging community of digital authoritarians. As with our Russia report, we leveraged our coordinating role across the interagency to combine open-source information with U.S. government information to shed light on previously non-public tactics and actors in the PRC government’s information manipulation apparatus. Overall, the report argues that in addition to its longstanding focus on presenting positive views of the PRC to external audiences, Beijing is now increasingly relying on disinformation to undermine other governments, particularly the United States. It also works hard to conceal the origin of its messaging by leveraging proxies such as influencers and by deploying pay-for-play coverage in key regions around the world.

THE WATCH: To what extent have Russia and the PRC been coordinating their messaging efforts and meta-narratives on issues such as COVID-19, the Ukraine war, Taiwan and the Arctic to influence the global information environment?

RUBIN: At the public level, I’d describe what we’re seeing between Russia and the PRC as mutually supporting activity, in which Russian narratives find their way onto PRC communications and messaging platforms and vice versa. It is a parroting or amplification of each other’s anti-U.S. or anti-West content that isn’t particularly sophisticated, but it is getting quicker. The time between one adversary’s initial release of a narrative and the other’s regurgitation of it, in the target language and aimed at targeted audiences, is getting shorter. I think each also values the other as a reliable source feed of anti-U.S. and anti-West content, and each becomes another reinforcing proxy for the original corrosive disinformation narratives.

THE WATCH: How will adversarial messaging from Russia and the PRC evolve over the next decade? What are the implications for global diplomatic relations?

RUBIN: Adversarial messaging, like every other facet of daily life — good and bad — is going to be recast by advancements in AI technology over the next decade. In the information space, AI has the potential to strengthen democracy by advancing resilience, openness, civic engagement and participation, and access to government services and information. But AI can also be used as a tool to undermine democracy, including through voter suppression, information manipulation and curtailment of civic engagement. One of our biggest concerns is the inevitability of our adversaries and competitors using current and emerging technologies to better obscure their authorship and dissemination of AI-generated materials to conduct influence and information operations at scale that are more convincing, precise and compelling than anything we’re seeing right now.

As it is, adversarial messaging is already evolving to be more realistic with generative AI technology. This content will threaten national security, public safety, trust, democratic institutions and human rights with greater intensity as the enabling technologies improve. The ability to identify and collectively oppose faster and farther-reaching information manipulation campaigns — and connect them to their hidden hands — also gets more challenging over time.

While access to accurate and authentic information will remain essential to the health of a democratic society and the well-being of its people, a key step in reducing the risks and harms posed by AI-generated media is for all countries to share our commitment to responsible government procurement and use of AI systems.

President [Joe] Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence captured the challenge very well, I think, noting that “AI holds extraordinary potential for both promise and peril.” The order added that U.S. leadership on AI, and unlocking the technology’s potential to solve our most difficult challenges, will require, among other things, “investments in AI-related education, training, development, research and capacity.”

THE WATCH: How do the U.S. Department of State and the Department of Defense (DOD) currently work together to counter propaganda, disinformation and misinformation? Does combating adversarial messaging require a whole-of-government approach?

RUBIN: The Department of Defense is a great interagency partner to the GEC. Like the Department of State, it is foreign-focused and active around the globe, protecting U.S. interests and enforcing our security commitments. I find it particularly helpful to consult with the range of DOD stakeholders, including the Office of the Secretary of Defense Policy and combatant commanders, when we take on regional work with our U.S. ambassadors and their embassy teams. We often find that they may have similar interests, objectives and supporting activities, with resources they’ve already committed. It is always best to share information, coordinate efforts and harness action across government entities to reach our nation’s foreign policy objectives. DOD continues to be an excellent partner in that work.

THE WATCH: What is your vision for the future of the GEC and what new initiatives are you going to introduce to combat adversary propaganda and disinformation?

RUBIN: The GEC developed the Framework to Counter Foreign State Information Manipulation to 1) create a shared understanding of this threat, and 2) encourage interoperable solutions with our partners. The framework is a tool to build a global coalition of like-minded countries that are committed to working together to confront foreign disinformation. We believe that developing greater resilience to this threat is critically important since disinformation is very difficult to counteract once it takes root. So, the framework encourages a whole-of-society approach that focuses on capacity building in key areas including national policies and strategies, government institutions, human and technical capacity, civil society and multilateral engagement.

Since we launched the framework in the fall of 2023, nine countries have endorsed it, including countries with more experience or resources for dealing with disinformation, such as Germany and Japan, and less experienced or less resourced countries, such as Albania, Moldova and North Macedonia. The framework allows countries to evaluate their own counter-disinformation capabilities and then partner in areas where they need help. We plan to meet these needs with resources from the State Department as well as the collective tools and experience of the U.S. government and our allies and partners. The framework is a mechanism for collective action.

I was recently at the Munich Security Conference, where I held a discussion with our British, Canadian and German counterparts on foreign information manipulation as a national security threat. We each noted how countries such as Russia and the PRC are using information warfare to undermine our societies and our national security interests. We also spoke about the need for collective action, and we highlighted tools including the framework to build our shared capabilities and coordinated responses. Looking ahead, we hope to bring dozens of additional countries into this coalition to expand our outreach to areas like Sub-Saharan Africa and Latin America. Russia and the PRC are investing heavily in spreading disinformation and deceptively supporting proxies in these regions, where countries are much less equipped to deal with this threat. Ultimately, we all need to work together to strengthen our societies so that we can protect our sovereignty in the information domain in the same way we protect it in the physical domain.